Web special of the cover story of Fraunhofer magazine 4.2020

Next Generation Computing

“The future of computing is hybrid”

The year 2020 has marked a turning point, and not only in terms of health: it has also seen unprecedented data usage. In October, market analysts at the VATM (Trade Association of Telecommunications and Value-Added Services Providers) estimated that 5.2 billion gigabytes of data will be transmitted across the German mobile networks alone this year – an increase of 52.9 percent on the previous year. Just five years ago, mobile data traffic amounted to roughly 600 million gigabytes. In 2020, an estimated 72 billion gigabytes are whooshing through Germany's fixed network lines – that's an increase of 28.6 percent.

According to the Federal Statistical Office, some 33 zettabytes of digital data were generated worldwide in 2018 alone. Statisticians are predicting more than a five-fold increase to 175 zettabytes for the year 2025. That's 175,000,000,000,000,000,000,000 bytes. As a comparison: All of Shakespeare's works, as once calculated by Computer Weekly magazine, comprise 5 MB, or 5,000,000 bytes in total. The next, and last, known unit is the yottabyte. And our digital society is moving ever closer to this limit.
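The orders of magnitude quoted above can be checked with a few lines of arithmetic. The following is only an illustrative sketch: it assumes decimal (SI) units, and the variable names are chosen for this example.

```python
# Decimal (SI) unit sizes in bytes; assumption: the article uses SI prefixes.
MEGABYTE = 10**6
ZETTABYTE = 10**21
YOTTABYTE = 10**24

data_2018 = 33 * ZETTABYTE      # data generated worldwide in 2018
data_2025 = 175 * ZETTABYTE     # forecast for 2025
shakespeare = 5 * MEGABYTE      # complete works, as cited in the text

growth_factor = data_2025 / data_2018            # "more than five-fold"
shakespeare_copies = data_2025 // shakespeare    # how many complete works fit
share_of_yottabyte = data_2025 / YOTTABYTE       # distance to the next unit

print(f"2025 forecast: {data_2025:,} bytes")
print(f"Growth 2018 to 2025: {growth_factor:.1f}x")
print(f"Equivalent to {shakespeare_copies:,} copies of Shakespeare's works")
print(f"Share of one yottabyte: {share_of_yottabyte:.1%}")
```

On these assumptions, the 2025 forecast corresponds to 17.5 percent of a yottabyte.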

Alongside video streaming, sensor data from the Internet of Things in particular will drive data growth in the future. Artificial intelligence, Industry 4.0, autonomous driving: all of these digital developments need increasing amounts of data, and that means more computing power and energy. In 2020, some 80 percent of all data generated were still processed in centralized systems and 20 percent in systems at the edge. It is likely that this ratio will have reversed by 2025. And as we watch the data mountain grow, we approach the limits of our existing computer technologies. Soon, they will barely be able to meet the new requirements for energy consumption, data processing and transfer times.

And the torrent of data is not the only challenge. As we become more and more reliant on digital networks and data, the demands for a secure and resilient digital society grow. Technological sovereignty in particular, meaning self-determination and control over systems and data, is playing a key role in Germany and in the EU. Until now, the market power of American IT giants such as Microsoft and Google in particular has led to almost complete dependence.

“To successfully rise to these challenges, we need to develop trusted, high-performance and resource-efficient hardware and software,” says Prof. Albert Heuberger, Head of the Fraunhofer Institute for Integrated Circuits IIS in Erlangen. Together with his colleague Prof. Anita Schöbel from the Fraunhofer Institute for Industrial Mathematics ITWM in Kaiserslautern, he is responsible for Next Generation Computing, an area the Fraunhofer-Gesellschaft has defined as one of seven strategic research fields. In the computer center of the future, there will be more ways to solve problems. As Heuberger explains: “The future is in hybrid, secure computer technologies that can be used in their own right or as a complement to other solutions, depending on the nature of the problem. We investigate which approach would be best for which problem – and adapt the architecture to the application concerned.” Three technologies are key to Next Generation Computing: neuromorphic hardware, trusted computing and quantum computing.

Neuromorphic computing

Our brain is an incredible phenomenon. It can process and store huge amounts of information while using no more energy than a 20-watt light bulb. Its neural networks, in which billions of nerve cells are connected by synapses, adapt permanently and flexibly to new learning processes and experiences. This plasticity makes the human brain more than just a paradigm of efficiency: it is superior to today's AI systems, which are for the most part highly specialized and inefficient.

Researchers have taken this success model, the special structure of the brain, as their archetype for mapping to the circuits of neuromorphic chips. “Neuromorphic hardware is a new approach to design,” explains Dr. Loreto Mateu, who is coordinating neuromorphic activities across several projects at Fraunhofer IIS. Neural networks are being used as algorithms for integrated circuits in order to imitate neurobiological architectures. And here's the most exciting thing: “Data are processed in parallel in distributed memories, not centrally like in conventional central processing units (CPUs). So there's less transfer between memory and CPU, such that the job can be done much faster and more efficiently by neuromorphic chips than by current processors.”

In Erlangen, Munich and Dresden, and at other locations too, researchers at Fraunhofer IIS, the Fraunhofer Research Institution for Microsystems and Solid State Technologies EMFT and the Fraunhofer Institute for Photonic Microsystems IPMS are developing new neuromorphic systems for semiconductor chips that are set to allow neuromorphic computing directly on mobile, battery-operated devices. “This will do away with the time-consuming transfer of data between processor and memory, and that in itself will minimize the power consumption for complex computing and transfer procedures, and reduce the latency times as well,” explains Dr. Mateu. So the technology could prove to be an asset for AI applications in particular. Such applications currently need huge amounts of energy because machine learning relies on large matrix multiplications whose many individual operations have to be carried out in parallel, and fast reaction times are required as well.
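To give a feel for why matrix multiplication dominates the workload, the following minimal sketch computes one layer of a neural network as a matrix-vector product and counts the multiply-accumulate steps. The function name `dense_layer` and the toy numbers are assumptions made for this illustration and are not taken from any Fraunhofer design.

```python
def dense_layer(weights, inputs):
    """Compute one neural-network layer as a matrix-vector product.

    Returns the outputs and the number of multiply-accumulate (MAC) steps.
    Each MAC needs one stored weight, so on a conventional processor the
    MAC count is also a rough proxy for memory-to-processor transfers.
    """
    outputs = []
    mac_count = 0
    for row in weights:
        acc = 0.0
        for w, x in zip(row, inputs):
            acc += w * x      # one multiply-accumulate step
            mac_count += 1
        outputs.append(acc)
    return outputs, mac_count


# Toy layer: 2 neurons, 3 inputs each (hypothetical values).
weights = [[0.5, -1.0, 2.0],
           [1.5, 0.0, -0.5]]
inputs = [1.0, 2.0, 3.0]

outputs, macs = dense_layer(weights, inputs)
print(outputs, macs)
```

The rows are independent of one another, which is why the work can be parallelized. A layer with 1,000 inputs and 1,000 neurons already needs a million such steps per inference, which is the kind of data movement that processing inside the memory is meant to avoid.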

“If the information is saved and processed in the system itself, a neural processing unit in a smartphone for example, the energy efficiency of such applications increases,” the researcher explains. Her colleague Dr. Thomas Kämpfe at Fraunhofer IPMS, also working on the technology, adds: “According to circuit simulations, we can perform individual computing operations with a latency of just one nanosecond. In terms of energy-saving potential, this makes us 100 to 1000 times more efficient than established AI hardware, and compared to conventional hardware, the gap is even wider. The low latency of the individual operations means that even very deep neural networks can be computed in real time.”

This technology, then, has what it takes to tackle one of the most pressing challenges of digitization: the enormous energy consumption of computer centers. As early as 2014, the IT sector was producing as much CO2 worldwide as the aviation industry as a whole, reports the German Federal Environment Agency. A study recently presented by the EU Commission shows that the energy consumption of computer centers in EU member states will rise from 2.7 percent of the energy demand in 2018 to 3.2 percent by 2030. “As well as looking at local data processing, we are designing the electronics with intelligent standby and low-power circuit architecture to ensure minimum energy consumption,” explains Dr. Mateu. And she reveals: “The first chips with a neuromorphic design are set to go into production in 2021.”

In the EU-funded NeurONN project, Fraunhofer EMFT is working with six European partners to develop a neuro-inspired computer architecture that is not based on common silicon technology. In this architecture, information is encoded in the phase of coupled oscillating elements that are interconnected to form a neural network. “Just like the brain, the two key components in neuromorphic computing are called neuron and synapse – they replicate the distributed computing and memory units,” explains Dr. Armin Klumpp, Project Manager at Fraunhofer EMFT. “The neurons used in the project are novel elements based on vanadium dioxide, which can be 250 times more efficient than state-of-the-art digital oscillators. The synapses are memristors – a word made up from memory and resistor – based on innovative 2D-dichalcogenide materials.” In terms of switching speed, lifespan and energy consumption, these minute elements are set to be up to 330 times more efficient than current technologies.

The neuromorphic chips will be used wherever energy efficiency and low latency times are key, because devices are battery-operated or there's no time to send data to the cloud and wait for a response. Examples include analyzing biosignals during an ECG or EEG, “electronic noses” for gas and odor detection, signal processing for voice recognition or anomaly detection, and hearing aids. Mobile and portable sensor systems can also process their sensor data much more energy-efficiently, which is relevant for autonomous driving, satellite applications, and predictive maintenance or condition monitoring in Industry 4.0. One major advantage of neuromorphic hardware is that information is stored locally, not in a cloud, making the devices more secure and improving the degree of data protection. Last but not least, neuromorphic chips provide the basis of Edge AI applications.

Trusted Computing

Trusted computing is the second element of Next Generation Computing and the one which, technologically, has flourished the most. “Trusted electronics and data security form the basis of all digital, networked systems, for the Internet of Things in particular, but also for AI,” says Prof. Albert Heuberger from Fraunhofer IIS.

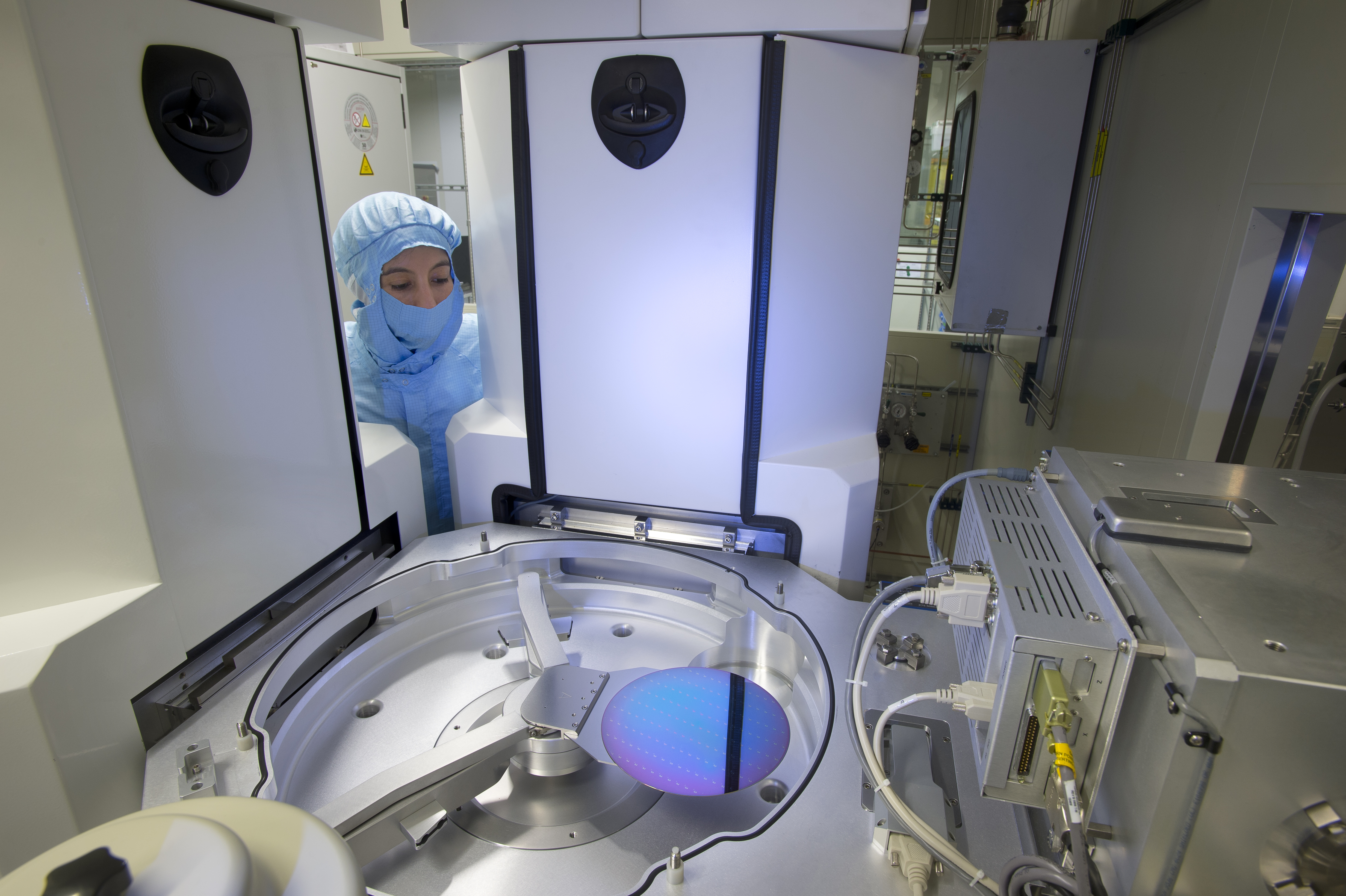

Wherever personal or security-critical data are processed, for example in medical engineering and autonomous driving, or where infrastructures are critical, it is essential for owners to have complete control over their ICT systems and for users to be informed about the properties of the systems they're using. The entire data flow – from the end customer to the actual hardware that does the data processing – has to be considered. Time and time again in the past, hardware in particular had security vulnerabilities that allowed sensitive information to be read. It was only in spring 2020 that it became known that many Intel and AMD chips were permitting unauthorized access to protected data. The fault was in the design of the processors. Vulnerabilities can also often stem from undocumented interfaces or implementation faults. “Trusted computing, then, is not only about fail-safe hardware and trusted software. It extends from secure semiconductor production, non-readable memory contents and secure computer identities through to secure embedded systems,” explains Prof. Heuberger.

Dr. Patrick Bressler, head of the Central Office of the Fraunhofer Group for Microelectronics, addresses another crucial issue: “Many critical components of digital technologies are today produced outside Europe, and foreign suppliers hold quasi-monopoly market positions in many areas of the digital value added chain. This creates a heavy reliance, and it could be used to Germany's disadvantage.” Politicians have recognized this too: the “Trusted electronics – Made in Germany” initiative, financed by the German Federal Ministry of Education and Research (BMBF), will develop and apply the relevant standards, norms and processes on the basis of a national and European chip security architecture. The declared aim: to strengthen Germany's technological sovereignty over the long term.

In the TRAICT innovation program (Trusted Resource Aware ICT), 18 Fraunhofer Institutes work together to create framework conditions to ensure that information and communication technology is trusted and compliant, and can be used in a self-determined and secure way. The key question is: How can the reliability of critical electronic components and systems in globally interwoven supply and value-added chains be validated and guaranteed?

The higher-level system in TRAICT is a 5G mobile radio scenario, which can also be transferred to other applications. The participating research teams are examining system architectures and their components for their trustworthiness and energy efficiency. They want to make it easier for users to examine the hardware, and they do this through a transparent design and disclosed specifications. The aim is to maintain an overview of exactly what is implemented and how, and hence to be able to monitor functionality.

The use of open-source platforms, which offer more transparency and allow user-specific modification and precise examination of the design, plays a key role here. An ecosystem with open-source RISC-V processors will allow companies to build their own hardware, even in small quantities. The project also investigates analysis methods for chips in order to uncover any unwanted functions, such as hardware Trojans. It also seeks to optimize energy efficiency, both locally in components and assemblies, using new semiconductor materials and so on, and at system level in the distributed computing strategy, through predictive maintenance or AI.

Quantum computing

The third pillar of future computer architecture is quantum computing. It is this technology more than any other that has become the focus of high hopes in recent years. Many states are funding the research with billions of euros, and large companies and start-ups are competing for the qubits. Quantum computers are expected to take just seconds to solve problems for which computers today need years. Quantum-based computers can be much faster than their conventional counterparts because their information units, the qubits, are elementary particles such as electrons or photons that can be entangled with one another.

We can think of qubits as rotating particles that decide on a rotational direction or polarization only when measured. Until they are measured, they remain in a combined state, called a superposition. As a result of this effect, a qubit can not only take the value 0 or 1, like a conventional bit, but also any combination of the two. In this superposition, the particles can be entangled with one another and used in this way for logical computing operations. Complex tasks can thus be computed in parallel rather than one step after another. Each additional qubit doubles the number of states the system can represent at once.
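The doubling with every added qubit can be made concrete with a small classical simulation. The sketch below is an illustration only: it stores the state of n qubits as a list of 2^n amplitudes, restricts itself to real amplitudes for simplicity (in general they are complex numbers), and uses the standard Hadamard gate to put every qubit into an equal superposition of 0 and 1. The helper names are chosen for this example.

```python
import math


def zero_state(n_qubits):
    """State vector with all qubits in state 0: one amplitude per basis state."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    return state


def hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a real-valued state vector."""
    s = 1 / math.sqrt(2)
    bit = 1 << target
    new_state = list(state)
    for i in range(len(state)):
        if (i & bit) == 0:                   # pair basis states differing in `target`
            a, b = state[i], state[i | bit]
            new_state[i] = s * (a + b)
            new_state[i | bit] = s * (a - b)
    return new_state


for n in (1, 2, 3, 10):
    state = zero_state(n)
    for qubit in range(n):
        state = hadamard(state, qubit)
    probabilities = [amp * amp for amp in state]
    print(f"{n} qubits -> {len(state)} amplitudes, "
          f"each outcome has probability {probabilities[0]:.6f}")
```

The list doubles in length with every additional qubit, which is also why classical simulations of this kind become impractical beyond a few dozen qubits.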

“But quantum computers are not expected to be able to solve every problem,” warns Anita Schöbel, Head of Fraunhofer ITWM. “Success hinges on having an algorithm which is able to use the effects of quantum mechanics. An exponential improvement of quantum computing in contrast to classic computing is possible in some applications, but for most applications this is still under research. We are in the process of investigating what kind of problems we can better solve in future with quantum computers and which using alternative architectures.”

To find the answers, Fraunhofer has recently collaborated with IBM to bring a quantum computer, the IBM Q System One, to Germany – the first of its kind in Europe. The fragile freight arrived in Germany by ship in November. The quantum computer is currently being installed and is scheduled to go into operation in Ehningen in Baden-Württemberg in January 2021. “The aim is to test the first applications directly. That way, we will be expanding not only our in-house expertise, but that of the German economy as a whole. We need access to the quantum computer now, to enable us to build and operate the next generation,” says Prof. Oliver Ambacher with conviction. He is head of the Fraunhofer Institute for Applied Solid State Physics IAF in Freiburg and one of the spokespersons for the Fraunhofer Competence Center Quantum Computing. The Center was established as a central point of contact for anyone wanting to conduct research on and with the quantum computer together with Fraunhofer Institutes.

- Kick-off for the EU project NeurONN (Press information Fraunhofer EMFT Feb. 19, 2020) (emft.fraunhofer.de)

- Innovation program TRAICT – Trusted Resource Aware ICT (idmt.fraunhofer.de)

- EU-Project SEQUENCE – Cryogenic 3D Nanoelectronics (iaf.fraunhofer.de)

- IBM and Fraunhofer bring Quantum Computing to Germany (Fraunhofer Press Release March 13, 2020) (fraunhofer.de)

- EnerQuant: Quantum Computing for Energy Economics (Press Release Fraunhofer ITWM Oct. 28, 2020) (itwm.fraunhofer.de)

Further projects

Neuromorphic Computing

- Memristors for the computers of tomorrow / Fraunhofer ENAS (enas.fraunhofer.de)

- Neuromorphic Hardware / Fraunhofer IIS (iis.fraunhofer.de)

- Power-saving chips for neuromorphic computing / Fraunhofer EMFT, IIS, IPMS: Tempo Project (emft.fraunhofer.de)

- “Energy-efficient AI system”: ADELIA Project / Fraunhofer IIS, IPMS (iis.fraunhofer.de)

Trusted Computing

- Project USeP (Fraunhofer IIS, Division Engineering of Adaptive Systems EAS) (eas.iis.fraunhofer.de)

- Lighthouse project "ZEPOWEL – Towards Zero Power Electronics" / Fraunhofer IZM (fraunhofer.de)

- MASSTART Project / Fraunhofer IZM (izm.fraunhofer.de)

Quantum Computing

- SEQUENCE - Cryogenic 3D Nanoelectronics / Fraunhofer IAF (iaf.fraunhofer.de)

- EnerQuant – Quantum Computing for the Power Industry / Fraunhofer ITWM (itwm.fraunhofer.de)

- PlanQK – Platform and Ecosystem for Quantum-assisted Artificial Intelligence / Fraunhofer FOKUS

- Quantum computing / Fraunhofer SCAI (scai.fraunhofer.de)