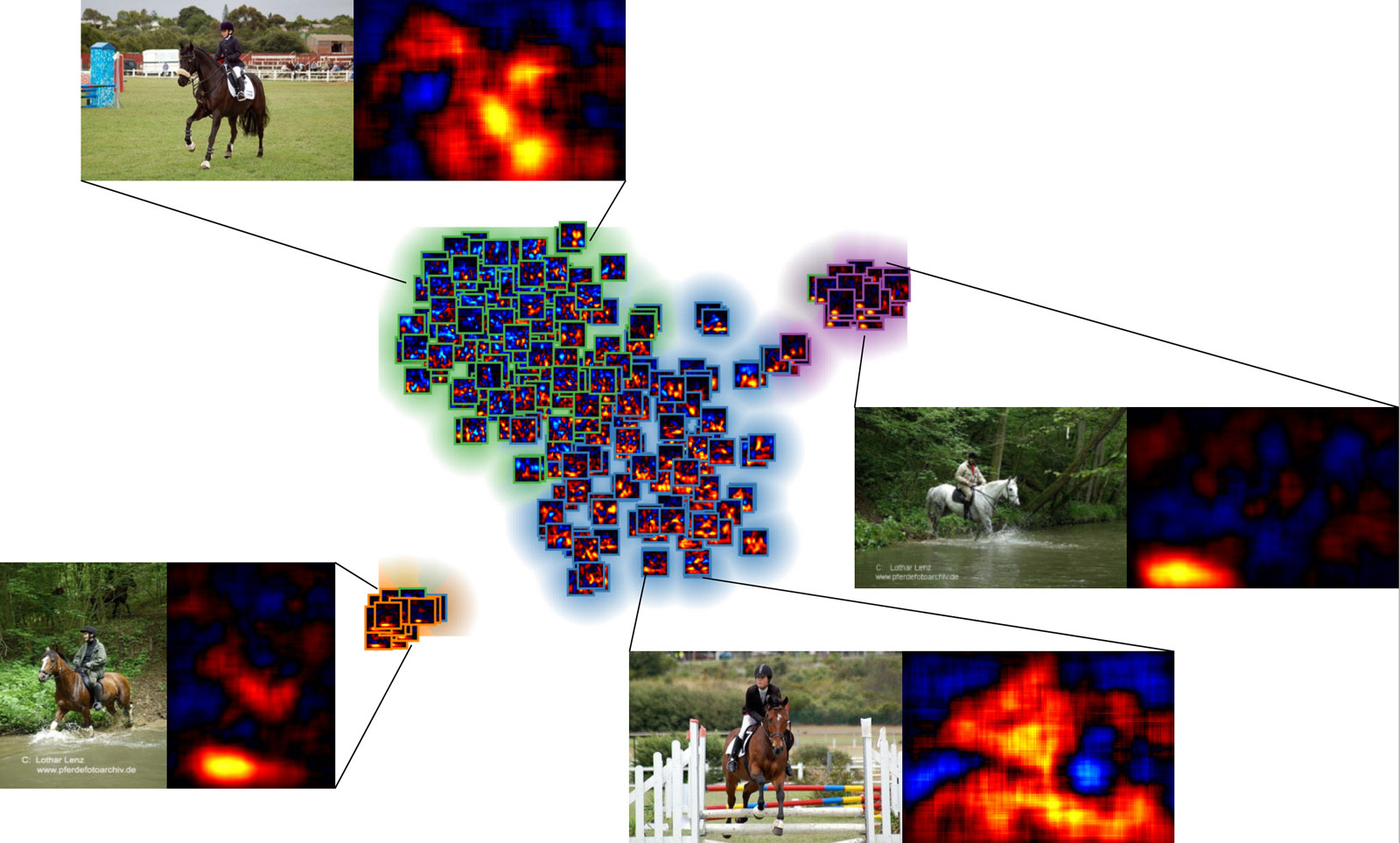

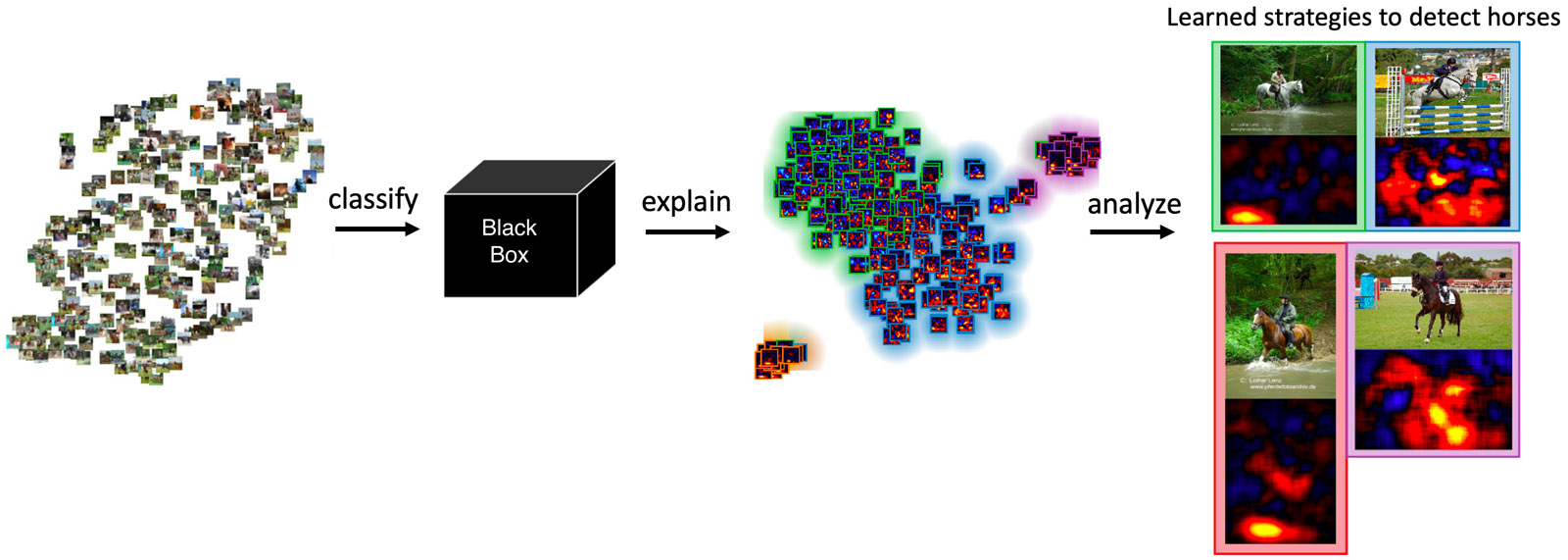

Today it’s almost impossible to find an area in which artificial intelligence is irrelevant, whether in manufacturing, advertising or communications. Many companies use learning and networked AI systems, for example to generate precise demand forecasts and to predict customer behavior accurately. The same approach can also be used to adjust regional logistics processes. Healthcare also relies on specific AI tasks, such as generating prognoses from structured data. Image recognition is one example: X-ray images are fed into an AI system, which then outputs a diagnosis. Proper detection of image content is also crucial to autonomous driving, where traffic signs, trees, pedestrians and cyclists have to be identified with complete accuracy. And this is the crux of the matter: AI systems have to provide absolutely reliable problem-solving strategies in sensitive application areas such as medical diagnostics and in security-critical areas. However, in the past it hasn’t been entirely clear how AI systems make decisions. Furthermore, the predictions depend on the quality of the input data. Researchers at the Fraunhofer Institute for Telecommunications, Heinrich Hertz Institute, HHI and Technische Universität Berlin have now developed a technology, Layer-wise Relevance Propagation (LRP), which renders AI forecasts explainable and in doing so reveals unreliable problem-solving strategies. A further development of LRP technology, referred to as Spectral Relevance Analysis (SpRAy), identifies and quantifies a broad spectrum of learned decision-making behaviors and thus identifies undesirable decisions even in enormous datasets.

Transparent AI

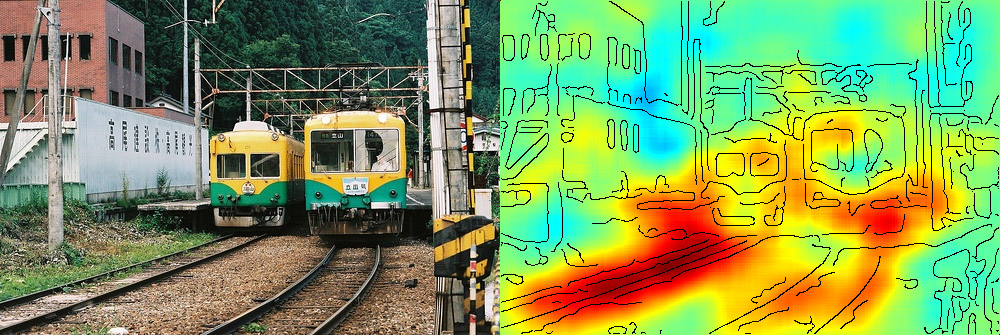

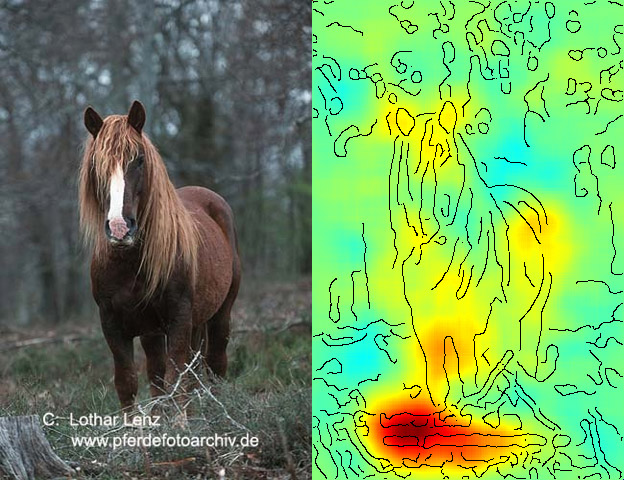

In practice the technology identifies the individual input elements which have been used to make a prediction. Thus, for example, when an image of a tissue sample is input into an AI system, the influence of each individual pixel on the classification result is quantified. In other words, as well as predicting how “malignant” or “benign” the imaged tissue is, the system also provides information on the basis for this classification. “Not only is the result supposed to be correct, the solution strategy is as well. In the past, AI systems have been treated as black boxes. The systems were trusted to do the right things. With our open-source software, which uses Layer-wise Relevance Propagation, we’ve succeeded in rendering the solution-finding process of AI systems transparent,” says Dr. Wojciech Samek, head of the “Machine Learning” research group at Fraunhofer HHI. “We’re using LRP to visualize and interpret neural networks and other machine learning models. We use LRP to measure the influence of every input variable in the overall prediction and parse the decisions made by the classifiers,” adds Dr. Klaus-Robert Müller, Professor for Machine Learning at TU Berlin.
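To make the idea of quantifying each input's influence more concrete, here is a minimal sketch of one commonly described LRP variant, the epsilon rule, applied to a tiny fully connected ReLU network. It is not the researchers' open-source software: the function name `lrp_epsilon`, the random weights, the absence of bias terms and the four-value "image" are all assumptions chosen purely for illustration.

```python
import numpy as np

def lrp_epsilon(weights, x, target, eps=1e-9):
    """Illustrative LRP (epsilon rule) for a bias-free dense ReLU network.

    weights: list of (n_in, n_out) arrays, one per layer (hypothetical model)
    x:       1-D input vector, e.g. flattened pixel values
    target:  index of the output score to explain
    Returns the output scores and one relevance value per input element.
    """
    # Forward pass, keeping the activations that enter each layer.
    activations = [x]
    for i, W in enumerate(weights):
        z = activations[-1] @ W
        if i < len(weights) - 1:      # ReLU on hidden layers only
            z = np.maximum(z, 0.0)
        activations.append(z)
    output = activations[-1]

    # Start with all relevance placed on the chosen output score.
    relevance = np.zeros_like(output)
    relevance[target] = output[target]

    # Backward pass: redistribute relevance layer by layer, in
    # proportion to each input's contribution a_i * w_ij.
    for W, a in zip(reversed(weights), reversed(activations[:-1])):
        z = a @ W
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabilise the division
        s = relevance / z
        relevance = a * (W @ s)
    return output, relevance

# Toy usage with made-up numbers: 4 "pixels", 3 hidden units, 2 classes.
rng = np.random.default_rng(0)
weights = [rng.normal(size=(4, 3)), rng.normal(size=(3, 2))]
x = rng.uniform(0.1, 1.0, size=4)
target = 0
output, relevance = lrp_epsilon(weights, x, target)
print("score being explained:", output[target])
print("relevance per input:  ", relevance)
```

In this sketch the per-input relevance values sum (up to the small stabiliser `eps`) to the score being explained, so each value can be read as that input's share of the prediction. For an image, reshaping the relevance vector back to the image dimensions would give a heatmap of the kind described above.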