In pursuit of hearing

Under the threat of drones

Drones caused more than 120 obstructions at German airports during 2019. With increasing regularity, airports are disrupted, flights are prevented from taking off and landings are delayed. These small unmanned flying machines go largely undetected by conventional radar systems. This makes it all the more important to introduce new approaches to reliably monitoring the airspace over airports, in order to minimize the hazards posed to aircraft and their passengers. The Fraunhofer Institute for Digital Media Technology IDMT in Oldenburg has developed a mobile sensor system for use at airports which, equipped with eight microphones, can localize sounds in three dimensions.

The detection system is based on an “acoustic fingerprint”, a specific pattern within the acoustic signal that is extracted from a diverse range of drone models and stored in a database. Sound recordings were taken of various drones over a 25-hour period. This is enough to ensure that even drones never “heard” before are reliably identified.

The biggest challenge facing the researchers at the Hearing, Speech and Audio Technology branch is background noise, for example the noise aircraft make as they take off and land. To localize the drones in real time, the sensors exploit the fact that sound travels relatively slowly through air and hence arrives at the individual microphones at slightly different times. From these time delays, the exact position in space can be calculated.
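The time-delay idea can be illustrated with a minimal Python sketch. It is not the Fraunhofer system: it assumes just two microphones, uses plain cross-correlation to estimate the delay, and takes 343 m/s as the speed of sound. The function names `estimate_delay` and `path_difference` are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees Celsius (assumed)


def estimate_delay(sig_a, sig_b, sample_rate):
    """Return by how many seconds sig_b lags behind sig_a (negative if it leads)."""
    # Full cross-correlation; output index 0 corresponds to lag -(len(sig_a) - 1).
    corr = np.correlate(sig_b, sig_a, mode="full")
    lag_samples = np.argmax(corr) - (len(sig_a) - 1)
    return lag_samples / sample_rate


def path_difference(delay_seconds):
    """Convert an arrival-time difference into a difference in path length (metres)."""
    return delay_seconds * SPEED_OF_SOUND


# Illustrative data: the same noise burst reaches microphone B 25 samples later.
rng = np.random.default_rng(0)
mic_a = rng.standard_normal(2048)
mic_b = np.concatenate([np.zeros(25), mic_a[:-25]])

delay = estimate_delay(mic_a, mic_b, sample_rate=48_000)
print(f"delay: {delay * 1e3:.3f} ms, path difference: {path_difference(delay):.3f} m")
```

A single delay only constrains the source to a surface of possible positions. With several microphone pairs, as in an eight-microphone array, the combined delays narrow this down to a position in space.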

Who can still believe the spoken word?

Disinformation and fakes pose a serious threat. Is the audio recording genuine? To expose audio manipulation, scientists at the Fraunhofer Institute for Digital Media Technology IDMT are making use of forensic audio analysis: special processes detect traces left by recording and editing and, as a result, are able to provide information about the creation and processing of the content.

Many sound recordings contain, for example, a hum whose frequency shows characteristic fluctuations caused by changes in supply and demand within the electrical power grid over time. If this hum shows “glitches” or does not match the claimed recording time, this can indicate that the recording has been modified. Beyond that, there are many other forensic approaches, including tools that detect partial overlaps in order to identify reuse, which is very common in the creation of fakes.
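As a rough illustration of the hum analysis, the following Python sketch tracks the dominant frequency near the mains frequency frame by frame and flags sudden jumps. It is a simplified stand-in, not the Fraunhofer IDMT tooling: the 50 Hz nominal frequency, the one-second frames, the 0.05 Hz jump threshold and the function names are all assumptions chosen for the example.

```python
import numpy as np


def hum_frequency_track(audio, sample_rate, nominal_hz=50.0, frame_s=1.0, band_hz=1.0):
    """Estimate the hum frequency in each non-overlapping frame of the recording."""
    frame_len = int(frame_s * sample_rate)
    n_fft = 8 * frame_len  # zero-padding gives a finer frequency grid
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)
    band = (freqs >= nominal_hz - band_hz) & (freqs <= nominal_hz + band_hz)
    window = np.hanning(frame_len)
    track = []
    for start in range(0, len(audio) - frame_len + 1, frame_len):
        frame = audio[start:start + frame_len] * window
        spectrum = np.abs(np.fft.rfft(frame, n=n_fft))
        # Strongest spectral peak inside the narrow band around the mains frequency.
        track.append(freqs[band][np.argmax(spectrum[band])])
    return np.array(track)


def find_glitches(track, max_jump_hz=0.05):
    """Return indices of frames whose hum frequency jumps relative to the previous frame."""
    return np.flatnonzero(np.abs(np.diff(track)) > max_jump_hz) + 1


# Illustrative data: a synthetic hum that jumps from 50.00 Hz to 50.25 Hz after 3 s,
# as might happen where two recordings have been spliced together.
sample_rate = 1000
t = np.arange(6 * sample_rate) / sample_rate
hum = np.where(t < 3.0, np.sin(2 * np.pi * 50.0 * t), np.sin(2 * np.pi * 50.25 * t))

track = hum_frequency_track(hum, sample_rate)
print(track, find_glitches(track))
```

Checking a recording against its claimed recording time would additionally require a reference log of the grid frequency, which this sketch does not model.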

“Ultimately, forensic audio analysis is a cat-and-mouse game,” says Patrick Aichroth, who leads the team of experts responsible for the technology at Fraunhofer IDMT. “There are innumerable ‘attack variants’ and any new type of attack requires new detectors to be developed.”

In Digger, a joint research project with Deutsche Welle and the Greek company ATC, the audio forensics technologies are being adapted for journalistic workflows and integrated into the content verification platform TrulyMedia. In the future, this will give journalists further ways to detect disinformation.

Auralization improves the shopping experience

Corona times are record-breaking times for online retail. For customers, internet shopping is becoming increasingly convenient, and now it increasingly appeals to the senses as well. To make products as appealing as possible, their sound should be considered too. The Fraunhofer Institute for Digital Media Technology IDMT in Ilmenau is developing a revolutionary software solution for this very purpose.

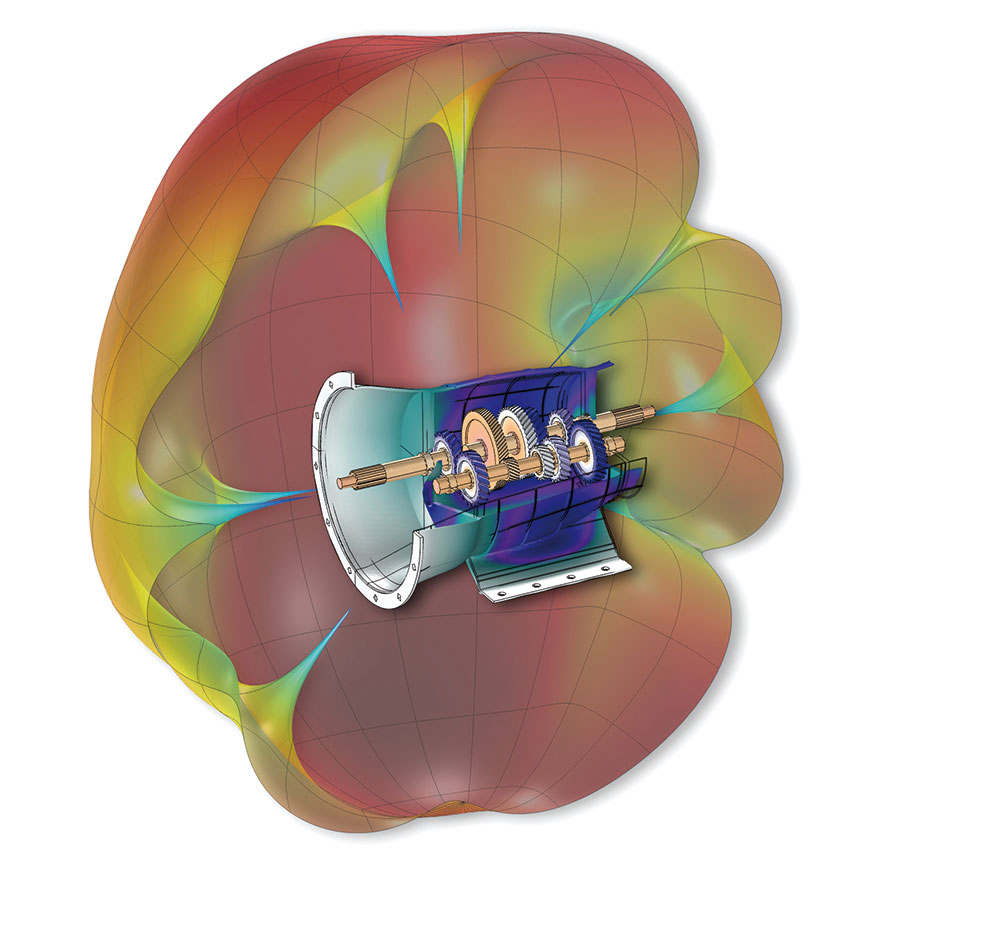

The Fraunhofer researchers combine spatial simulation data with measurement data in a virtual space to create an authentic sound experience. This process of making something acoustically perceptible is called auralization. It allows us to experience the acoustic behavior of products or components in different virtual environments. Fraunhofer IDMT successfully creates a realistic 3D audio experience. Unlike conventional reproduction methods, this system is able to replicate the acoustic directivity of a virtual object. Based on simulation and measurement data, Fraunhofer scientists generate sound that our senses perceive from the correct direction.

The new software AUVIP is also accelerating the development process. It is now possible to assess both virtual and real prototypes in an understandable way and easily compare them with one another based on their product sound. “For the first time, AUVIP is allowing us to experience the sound of numerical simulation data — long before a real prototype is created,” explains Bernhard Fiedler, the expert in charge at Fraunhofer IDMT. “This makes our tool the perfect complement to virtual product development. It will allow us to experience 3D with our eyes and ears.”

The realistic sound of numerous virtual everyday appliances: https://auralization.idmt.fraunhofer.de

Connecting co-workers

“Please God, give us ear lids!” bemoaned Kurt Tucholsky in his book “Castle Gripsholm. A Summer Story”. Of course, we can close our eyes and look away. But we can’t close our ears. Until now, that is. The Fraunhofer Institute for Digital Media Technology IDMT in Oldenburg is using AI-supported technology to develop intelligent ear protection the size of an earbud.

Blind source separation algorithms are already capable of differentiating noise from speech. A hearable will then be able to balance speech and background noise against one another in such a way that the spoken word remains intelligible and the ambient noises are audible, but not too loud. For a natural sound in the ear, sound will also be reproduced so that we perceive it as coming from the correct direction. As a result, the wearer's sense of orientation in the room will be preserved.

The Fraunhofer scientists envisage the device being used in noisy working environments such as loud workshops. In such applications, the hearable is also able to connect co-workers who may, for example, be standing on different sides of a machine and cannot hear each other at all.

Understanding TV better

It’s Sunday. The popular series “Tatort” is showing. It’s a viewership record. A good fifth of viewers find listening difficult because their hearing is impaired. A recurrent theme of complaints made to the TV stations is that music and background sounds are too loud and the speech being broadcast too faint. Researchers at the Fraunhofer Institute for Integrated Circuits IIS have therefore developed an AI-based solution that makes dialog more intelligible — and alleviates this persistent annoyance that plagues some viewers.

An algorithm examines the audio material and distinguishes between speech and sounds, ambience and music. The AI detects when someone is speaking, separates dialog from background noises and reduces the latter if they are too loud. In a study in cooperation with a public service broadcaster, 2000 viewers were asked about the new, more intelligible sound mixing.
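The final step, turning down the background only when it is too loud, can be sketched in Python as follows. This is not the Fraunhofer IIS algorithm: it assumes the dialog and background have already been separated into two signals, and the 15 dB target margin and the function names are hypothetical values picked for illustration.

```python
import numpy as np


def rms_db(signal):
    """Root-mean-square level of a signal in decibels relative to full scale (1.0)."""
    rms = np.sqrt(np.mean(np.square(signal)))
    return 20.0 * np.log10(max(rms, 1e-12))  # floor avoids log of zero for silence


def remix_for_intelligibility(dialog, background, min_margin_db=15.0):
    """Mix dialog and background, attenuating the background only if it is too loud.

    Returns the new mix and the gain (in dB, zero or negative) applied to the background.
    """
    margin_db = rms_db(dialog) - rms_db(background)
    # If the background is already at least min_margin_db quieter, leave it untouched.
    gain_db = min(0.0, margin_db - min_margin_db)
    gain = 10.0 ** (gain_db / 20.0)
    return dialog + gain * background, gain_db


# Illustrative data: "dialog" and "background" tones at the same level.
t = np.arange(48_000) / 48_000
dialog = 0.1 * np.sin(2 * np.pi * 200 * t)
background = 0.1 * np.sin(2 * np.pi * 1000 * t)

mix, applied_gain_db = remix_for_intelligibility(dialog, background)
print(f"background gain: {applied_gain_db:.1f} dB")
```

A real system would work on short time segments rather than the whole programme, so that the background is reduced only while someone is actually speaking.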

Even the younger interviewees liked the idea of being able to choose between two audio tracks (normal mix and Dialog+). 46 percent of all those interviewed preferred the more intelligible audio track, only one fifth the original mix.

If robots had ears

Welding is becoming increasingly automated: body construction in the automotive industry is already almost 100% automated. However, an experienced welder can hear during the welding process itself whether a seam or weld spot has been produced successfully. Until now, robots have lacked this acoustic feedback.

Researchers at the Fraunhofer Institute for Digital Media Technology IDMT in Ilmenau are working on a “sensical” solution. The Fraunhofer scientists are forerunners in establishing artificial intelligence on the basis of acoustic sensor data and machine learning algorithms — especially when it comes to data on airborne sound.

In the AKoS project, sponsored by the BMBF, a microphone was mounted on the arm of a welding robot just a short distance away from the electrode. Acoustic anomalies are recognized immediately, so the production process can be stopped and corrected as required.

The researchers at Fraunhofer IDMT have already trialed the technology in the final inspection of electrically adjustable vehicle seats, where it is used to check the installed servomotors in a non-destructive manner.

How a car hears sirens

In autonomous driving, computers conduct an entire orchestra of sensors, and the new first violin is acoustics. Modern cars see pretty much everything with six cameras, four radars and a lidar, which works with light beams instead of radio waves. But they do not hear a thing. On the contrary: with increasing comfort and constantly improving acoustic insulation, vehicle occupants are perceiving less and less noise from their surroundings.

To realize the “hearing car”, researchers at the Fraunhofer Institute for Digital Media Technology IDMT in Oldenburg are developing AI-based technologies that can recognize individual acoustic events. The idea is that a sensor will be able to perceive, categorize and localize background noises in a split second. This would enable future autonomous vehicles to move out of the way of their own accord whenever a siren approaches. The system makes sense even for vehicles steered by human hand: it could alert the driver, isolated from the outside world, at an early stage via a message in the head-up display.

The AI-based acoustic sensor system developed by the scientists at the Hearing, Speech and Audio Technology branch comprises acoustic sensors, computing units and modular software components. Besides sirens, it could also detect children playing on the side of the road, a cyclist ringing their bell or a train approaching an unguarded railway crossing.

A fast reaction can save human lives. At the same time, the AI-based acoustic event recognition system developed by Fraunhofer IDMT helps localize all manner of sound events.

Human hearing is extremely individual

Three questions for Bernhard Fiedler, Fraunhofer IDMT in Ilmenau

What for you personally is especially fascinating about digitizing the senses?

As an acoustician, I’m intrigued by how we can understand human hearing and digitize it in models. The more precise this model is, the more realistically a digital sound scene can be reproduced.

What are you hoping for in terms of a result?

I hope that in the future we will be able to achieve an artificial acoustic immersion that can barely be distinguished from reality. This will go a long way towards being able to better simulate, understand and ultimately modify everyday sources of noise. After all, lower noise emissions protect our health as well as the environment.

What is it that makes human perception so unique in comparison to technology — and where will technology play to its strengths?

Human perception is not only highly adaptive, it’s also highly individual. Take hearing, for example: what sounds pleasant to one person can be annoying to another. This is where I believe AI-based technologies, for instance, are better than humans at objectively evaluating everyday things.

Intelligent ears for our daily lives

Three questions for Dr. Jens-E. Appell, Fraunhofer IDMT in Oldenburg

What for you personally is especially fascinating about digitizing the senses?

For me as a hearing researcher, the way it helps people is a key aspect. What truly fascinates me is how technology can support the human sense of hearing, which has evolved superbly well over time. Conversely, we can also teach machines how to hear, allowing them to acoustically perceive themselves and their environment, identify malfunctions or be voice-controlled, for instance.

What are you hoping for in terms of a result?

I am convinced that AI-supported hearing systems will significantly change the way we communicate with each other and interact with our environment, and that these “intelligent ears” will in the future be part and parcel of our everyday routine — be that in industry, mobility, health care or in our private lives. They will acoustically detect speech or dangerous situations and mask out unwanted noises. They will perceive our environment and our health, warning us as required, and provide us with information about areas of interest.

What is it that makes human perception so unique in comparison to technology — and where will technology play to its strengths?

Of all the senses, our sense of hearing is perhaps the one most able to instantaneously trigger our emotions. Human beings not only perceive speech per se, they can also make out voices, moods and nuances. It will be a long time before technology is able to convey an emotional connection. Thanks to the use of AI, however, technology is getting better and better at supporting our perception, avoiding unpleasant circumstances such as noise, and bringing important events to our attention.